Hadoop is a powerful framework that enables distributed processing of large datasets. It follows the MapReduce paradigm, in which computation is broken down into independent map and reduce tasks that run across multiple machines.

However, setting up a Hadoop cluster from scratch can be challenging, especially when you have to handle the infrastructure, resource allocation, and job execution yourself. AWS Elastic MapReduce (EMR) simplifies this process by allowing us to spin up a managed Hadoop cluster with minimal effort.

In this post, we will walk through each step: setting up a fully functional Hadoop cluster on AWS EMR, running a MapReduce job on a simple dataset, and tearing the resources down afterwards. By the end, you will have a clear understanding of how AWS EMR works and how to manage clusters efficiently.

Setting Up the AWS CLI

Check AWS CLI version

Check your AWS CLI version using the following command:

aws --version

aws-cli/2.24.10 Python/3.12.9 Linux/6.8.0-52-generic exe/x86_64.ubuntu.22

Install AWS CLI

If you do not have the AWS CLI installed, you can install it on an Ubuntu machine by running the following commands:

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install

This will install the AWS CLI in the default location (/usr/local/bin/aws).

Update AWS CLI

If your AWS CLI is outdated, download the installer again as described above and then update the existing installation using the following command:

sudo ./aws/install --update

MapReduce is a powerful distributed computing model that enables large-scale data processing by breaking it into smaller tasks. It consists of two core functions:

- Map Function: Processes input data and transforms it into key-value pairs.

- Reduce Function: Aggregates and processes these key-value pairs to produce the final output.

For this hands-on exercise, we will use two Python scripts—mapper.py and reducer.py—to implement the map and reduce operations. These scripts will process an input file named input.txt, which contains the following line:

“Hello World! You are Cruel, Bye World!”
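To make the two functions concrete, here is a minimal word-count sketch in Python. It is not the mapper.py and reducer.py from the repository, whose contents may differ. The function names and the whitespace-based tokenisation are assumptions made for illustration. The `simulate_streaming` helper mimics the `mapper | sort | reducer` pipeline that Hadoop Streaming runs, so the logic can be tried locally without a cluster.

```python
from itertools import groupby


def run_mapper(lines):
    """Map phase: emit one 'word<TAB>1' record per whitespace-separated token."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"


def run_reducer(sorted_records):
    """Reduce phase: sum the counts of consecutive records sharing a key.

    Like a streaming reducer, this relies on the input being sorted by key.
    """
    pairs = (record.split("\t", 1) for record in sorted_records)
    for word, group in groupby(pairs, key=lambda pair: pair[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def simulate_streaming(lines):
    """Local stand-in for 'mapper | sort | reducer' (no Hadoop involved)."""
    return list(run_reducer(sorted(run_mapper(lines))))


if __name__ == "__main__":
    for record in simulate_streaming(["Hello World! You are Cruel, Bye World!"]):
        print(record)
```

Because the tokens are split on whitespace only, punctuation stays attached to the words, so "World!" is counted twice while "Cruel," is a key of its own.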

To execute a Hadoop MapReduce job, we need to store the input data and Python scripts in a central location. This location must be accessible by AWS EMR (Elastic MapReduce). The best way to achieve this is by using Amazon S3 (Simple Storage Service). It provides scalable and durable cloud storage.

Preparing the S3 Bucket

We will first create a new S3 bucket called big-data-bucket-02 to store our MapReduce scripts and the input file, input.txt.

Create S3 bucket

To create the bucket, run the following AWS CLI command in the terminal:

$ aws s3 mb s3://big-data-bucket-02

make_bucket: big-data-bucket-02

Once you have run the above command, you can check whether the bucket was created in S3 using the following command:

$ aws s3 ls

2025-02-24 20:27:01 big-data-bucket-02

The newly created bucket is listed. Now that the bucket exists, we can push our scripts into it. First, download mapper.py and reducer.py from the following Git repository and save them in a known location:

mapper.py and reducer.py.

I have kept these scripts in a local folder called BigData as below:

~/AWS/BigData$ ls -la

total 20

drwxrwxr-x 2 vivekb vivekb 4096 Feb 24 19:55 .

drwxrwxr-x 4 vivekb vivekb 4096 Feb 24 19:53 ..

-rwxrwxr-x 1 vivekb vivekb 187 Feb 24 19:50 mapper.py

-rwxrwxr-x 1 vivekb vivekb 460 Feb 24 19:50 reducer.py

Also, create an input text file called input.txt in the same directory, with the content discussed in the previous section.

Upload the Python Scripts (Mapper & Reducer) to S3

To upload the scripts to the S3 bucket, run the following commands from the Ubuntu terminal:

$ aws s3 cp mapper.py s3://big-data-bucket-02/

upload: ./mapper.py to s3://big-data-bucket-02/mapper.py

$ aws s3 cp reducer.py s3://big-data-bucket-02/

upload: ./reducer.py to s3://big-data-bucket-02/reducer.py

Upload the Input File to S3

Next, we have to upload the input file, input.txt (that is the name used in this exercise, but you can name it anything you like). To keep the bucket organised, we will upload the input file to an S3 prefix called input. To do that, run the following command from the directory where your input.txt file is located:

$ aws s3 cp input.txt s3://big-data-bucket-02/input/

upload: ./input.txt to s3://big-data-bucket-02/input/input.txt

Verify the Uploaded Files

To verify the uploaded files, we can use the S3 list command:

aws s3 ls s3://big-data-bucket-02/

PRE input/

2025-02-24 20:39:10 187 mapper.py

2025-02-24 20:39:21 460 reducer.py

Also list the contents of the input folder in the S3 bucket:

aws s3 ls s3://big-data-bucket-02/input/

2025-02-24 20:57:15 39 input.txt

This confirms that our S3 bucket now holds the scripts and the input file as expected.

Setting Up IAM Roles and Permissions

When running an EMR cluster, AWS needs permissions to perform the following actions:

Manage EC2 Instances

- EMR launches EC2 instances to process data using Hadoop.

- These instances need permissions to communicate with each other and to read/write data from S3.

Access S3 Buckets

- EMR reads input data from S3 and stores job results back in S3.

- It also needs permission to fetch the Mapper & Reducer scripts.

Write Logs for Monitoring & Debugging

- EMR logs execution details to S3 for job tracking and debugging.

- Without logging, debugging failed jobs becomes difficult.

For this exercise we need to create three different IAM roles.

IAM Roles Required for AWS EMR

EMR Cluster Role (EMR_DefaultRole)

- This service role allows EMR to launch and manage EC2 instances.

- It is assumed by Amazon EMR itself when the cluster starts.

EMR EC2 Role (EMR_EC2_DefaultRole)

- This role is assigned to each EC2 instance in the cluster.

- It grants permissions for EC2 instances to read/write to S3, interact with EMR, and generate logs.

EMR Auto Scaling Role (EMR_AutoScaling_DefaultRole)

- If Auto Scaling is enabled, this role allows dynamically adding/removing EC2 nodes to optimize costs and performance.
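The roles described above differ mainly in which AWS service is allowed to assume them. As a minimal sketch, the trust policy documents can be generated in Python rather than typed by hand, which avoids JSON quoting mistakes on the command line. The `trust_policy` helper and the `ROLE_PRINCIPALS` mapping are hypothetical names introduced here; the role names and service principals are the ones used in the manual setup in this post.

```python
import json


def trust_policy(service_principal):
    """Build a trust policy that lets one AWS service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Role name -> service principal, as used in this post (illustrative mapping).
ROLE_PRINCIPALS = {
    "EMR_DefaultRole": "elasticmapreduce.amazonaws.com",
    "EMR_EC2_DefaultRole": "ec2.amazonaws.com",
    "EMR_AutoScaling_DefaultRole": "elasticmapreduce.amazonaws.com",
}


if __name__ == "__main__":
    for role_name, principal in ROLE_PRINCIPALS.items():
        # The printed JSON can be passed to --assume-role-policy-document.
        print(role_name, json.dumps(trust_policy(principal)))
```

Only the EC2 role is assumed by `ec2.amazonaws.com`, because it is the role the cluster instances themselves use.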

Creating IAM Roles for EMR Manually

AWS provides a command to create default roles if they don’t exist:

aws emr create-default-roles

However, to understand the IAM setup, let’s create them manually:

Create the EMR Cluster Role (EMR_DefaultRole)

This role allows EMR to create and manage resources:

aws iam create-role --role-name EMR_DefaultRole \

--assume-role-policy-document '{

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": { "Service": "elasticmapreduce.amazonaws.com" },

"Action": "sts:AssumeRole"

}]

}'

{

"Role": {

"Path": "/",

"RoleName": "EMR_DefaultRole",

"RoleId": "AROAV3QSPQY5YCINQMWHO",

"Arn": "arn:aws:iam::402691950139:role/EMR_DefaultRole",

"CreateDate": "2025-02-24T21:09:14+00:00",

"AssumeRolePolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "elasticmapreduce.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

}

}

Now, attach permissions to this role:

aws iam attach-role-policy --role-name EMR_DefaultRole \
  --policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceRole

Create the EMR EC2 Role (EMR_EC2_DefaultRole)

This role allows EC2 instances within the EMR cluster to access S3 and other AWS services:

aws iam create-role --role-name EMR_EC2_DefaultRole \

--assume-role-policy-document '{

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": { "Service": "ec2.amazonaws.com" },

"Action": "sts:AssumeRole"

}]

}'

{

"Role": {

"Path": "/",

"RoleName": "EMR_EC2_DefaultRole",

"RoleId": "AROAV3QSPQY5VJD75B34G",

"Arn": "arn:aws:iam::402691950139:role/EMR_EC2_DefaultRole",

"CreateDate": "2025-02-24T21:12:10+00:00",

"AssumeRolePolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

}

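}

Now, attach the EC2 permissions policy to this role:

aws iam attach-role-policy --role-name EMR_EC2_DefaultRole \
  --policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforEC2Role

Create an Instance Profile for EMR EC2 Role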

- AWS EC2 instances require an instance profile to assume an IAM role.

- Without this, the role cannot be assigned to EC2 instances inside the EMR cluster.

Create an Instance Profile and attach the EMR EC2 role:

aws iam create-instance-profile --instance-profile-name EMR_EC2_DefaultRole

aws iam add-role-to-instance-profile --instance-profile-name EMR_EC2_DefaultRole --role-name EMR_EC2_DefaultRole

{

"InstanceProfile": {

"Path": "/",

"InstanceProfileName": "EMR_EC2_DefaultRole",

"InstanceProfileId": "AIPAV3QSPQY55JS75N3NL",

"Arn": "arn:aws:iam::402691950139:instance-profile/EMR_EC2_DefaultRole",

"CreateDate": "2025-02-24T21:13:22+00:00",

"Roles": []

}

}

Create the EMR Auto Scaling Role

If Auto Scaling is enabled, this role allows EMR to dynamically add/remove EC2 nodes:

aws iam create-role --role-name EMR_AutoScaling_DefaultRole \

--assume-role-policy-document '{

"Version": "2012-10-17",

"Statement": [{

"Effect": "Allow",

"Principal": { "Service": "elasticmapreduce.amazonaws.com" },

"Action": "sts:AssumeRole"

}]

}'

{

"Role": {

"Path": "/",

"RoleName": "EMR_AutoScaling_DefaultRole",

"RoleId": "AROAV3QSPQY5SZ3MNPLY4",

"Arn": "arn:aws:iam::402691950139:role/EMR_AutoScaling_DefaultRole",

"CreateDate": "2025-02-24T21:14:13+00:00",

"AssumeRolePolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "elasticmapreduce.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

}

}

Attach the Auto Scaling permissions:

aws iam attach-role-policy --role-name EMR_AutoScaling_DefaultRole \
  --policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforAutoScalingRole

Verifying IAM Role Setup

After completing the steps, verify the roles exist:

aws iam list-roles | grep EMR

"RoleName": "AWSServiceRoleForEMRCleanup",

"Arn": "arn:aws:iam::402691950139:role/aws-service-role/elasticmapreduce.amazonaws.com/AWSServiceRoleForEMRCleanup",

"RoleName": "EMR_AutoScaling_DefaultRole",

"Arn": "arn:aws:iam::402691950139:role/EMR_AutoScaling_DefaultRole",

"RoleName": "EMR_DefaultRole",

"Arn": "arn:aws:iam::402691950139:role/EMR_DefaultRole",

"RoleName": "EMR_EC2_DefaultRole",

"Arn": "arn:aws:iam::402691950139:role/EMR_EC2_DefaultRole",

To check attached policies:

aws iam list-attached-role-policies --role-name EMR_EC2_DefaultRole

{

"AttachedPolicies": [

{

"PolicyName": "AmazonElasticMapReduceforEC2Role",

"PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforEC2Role"

}

]

}

aws iam list-attached-role-policies --role-name EMR_DefaultRole

{

"AttachedPolicies": [

{

"PolicyName": "AmazonElasticMapReduceRole",

"PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceRole"

}

]

}

aws iam list-attached-role-policies --role-name EMR_AutoScaling_DefaultRole

{

"AttachedPolicies": [

{

"PolicyName": "AmazonElasticMapReduceforAutoScalingRole",

"PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforAutoScalingRole"

}

]

}

Creating and Configuring an EMR Cluster

Once our IAM roles are set up, we can proceed with launching an Amazon EMR cluster. An EMR cluster is a distributed computing environment optimised for big data processing using Hadoop, Spark, and other frameworks.

Understanding EMR Cluster Components

When launching an EMR cluster, it’s important to understand its three types of nodes:

Master Node

- Coordinates the execution of the job.

- Manages resource allocation and task scheduling.

- Runs the YARN ResourceManager (the JobTracker in Hadoop 1).

- If the master node fails, the entire cluster becomes non-functional.

Core Nodes (Required)

- Perform actual computation (MapReduce tasks).

- Store data using HDFS (Hadoop Distributed File System).

- There must be at least one core node in the cluster.

Task Nodes

- Only perform computations (do not store data).

- Useful for dynamically adding more processing power without affecting data storage.

Choosing EMR Cluster Configuration

When launching an EMR cluster, we configure the following:

Instance Types

AWS EC2 instances power the EMR cluster. For example:

- m5.xlarge (recommended for balanced performance).

- r5.xlarge (if memory-intensive workloads are needed).

- c5.xlarge (if CPU-intensive processing is required).

Number of Instances

- Master Node: Always 1.

- Core Nodes: Typically 2 or more (they perform the actual computation).

- Task Nodes: Optional, can be added for extra compute power.

Hadoop & Application Configuration

- EMR can be pre-configured with Hadoop, Spark, Hive, and other frameworks.

- For our MapReduce job, we only need Hadoop.

Logging

- AWS stores logs in Amazon S3 for later access.

- We define an S3 path for storing EMR logs (e.g., s3://big-data-bucket-02/logs/).
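The choices above map directly onto arguments of `aws emr create-cluster`. The following sketch assembles that argument list in Python so the configuration lives in one place. The `create_cluster_command` helper is a hypothetical name introduced for illustration; the flags and the example values (release label emr-6.5.0, key pair mpi-key, the two default roles) are the ones used in this post, and your own values will likely differ.

```python
import shlex


def create_cluster_command(name, release_label, log_uri, key_name,
                           instance_type="m5.xlarge", core_count=2):
    """Return the 'aws emr create-cluster' invocation as an argument list."""
    if core_count < 1:
        raise ValueError("the cluster needs at least one core node")
    return [
        "aws", "emr", "create-cluster",
        "--name", name,
        "--release-label", release_label,
        "--applications", "Name=Hadoop",  # only Hadoop is needed for MapReduce
        "--log-uri", log_uri,
        "--ec2-attributes",
        f"KeyName={key_name},InstanceProfile=EMR_EC2_DefaultRole",
        "--service-role", "EMR_DefaultRole",
        "--instance-groups",
        f"InstanceGroupType=MASTER,InstanceCount=1,InstanceType={instance_type}",
        f"InstanceGroupType=CORE,InstanceCount={core_count},InstanceType={instance_type}",
    ]


if __name__ == "__main__":
    command = create_cluster_command(
        name="HadoopCluster",
        release_label="emr-6.5.0",
        log_uri="s3://big-data-bucket-02/logs/",
        key_name="mpi-key",
    )
    # Print a shell-safe version for review instead of running it directly.
    print(shlex.join(command))
```

Printing the command rather than executing it keeps the sketch side-effect free; the list could also be passed to `subprocess.run` once it has been reviewed.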

Launching the EMR Cluster

Once we finalise the above settings, we launch the cluster using the following AWS CLI command:

aws emr create-cluster \

--name "HadoopCluster" \

--release-label emr-6.5.0 \

--applications Name=Hadoop \

--log-uri s3://big-data-bucket-02/logs/ \

--ec2-attributes KeyName=mpi-key,InstanceProfile=EMR_EC2_DefaultRole \

--service-role EMR_DefaultRole \

--instance-groups InstanceGroupType=MASTER,InstanceCount=1,InstanceType=m5.xlarge \

InstanceGroupType=CORE,InstanceCount=2,InstanceType=m5.xlarge

{

"ClusterId": "j-282ORMDVCGVYM",

"ClusterArn": "arn:aws:elasticmapreduce:eu-west-2:402691950139:cluster/j-282ORMDVCGVYM"

}

EMR cluster j-282ORMDVCGVYM has been successfully created. Now, let’s verify its status.

Check the Cluster Status

Run the following command to check the current state of your cluster:

aws emr describe-cluster --cluster-id j-282ORMDVCGVYM --query "Cluster.Status"

{

"State": "STARTING",

"StateChangeReason": {

"Message": "Provisioning Amazon EC2 capacity"

},

"Timeline": {

"CreationDateTime": "2025-02-24T21:42:08.881000+00:00"

}

}

The EMR cluster j-282ORMDVCGVYM is currently in the STARTING state: EMR is provisioning the required EC2 instances and will then configure the software on them.

Monitor Cluster Readiness

Re-run the following command periodically until the state changes to WAITING or RUNNING:

aws emr describe-cluster --cluster-id j-282ORMDVCGVYM --query "Cluster.Status"

{

"State": "WAITING",

"StateChangeReason": {

"Message": "Cluster ready to run steps."

},

"Timeline": {

"CreationDateTime": "2025-02-24T21:42:08.881000+00:00",

"ReadyDateTime": "2025-02-24T21:47:28.518000+00:00"

}

}

EMR cluster j-282ORMDVCGVYM is now in the WAITING state, meaning it’s ready to execute jobs.
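Re-running the status command by hand gets tedious, so here is a minimal polling sketch in Python. The `wait_for_state` and `cluster_state` helpers are hypothetical names introduced for illustration, and the interval and timeout defaults are arbitrary assumptions. `cluster_state` shells out to the same `aws emr describe-cluster` command used above and therefore needs the AWS CLI and valid credentials.

```python
import subprocess
import time


def wait_for_state(get_state, target_states, failure_states=(),
                   interval=30, timeout=1800, sleep=time.sleep):
    """Call get_state() until it returns a target state.

    Raises RuntimeError on a failure state and TimeoutError once roughly
    'timeout' seconds have been spent sleeping.
    """
    waited = 0
    while True:
        state = get_state()
        if state in target_states:
            return state
        if state in failure_states:
            raise RuntimeError(f"reached failure state {state}")
        if waited >= timeout:
            raise TimeoutError(f"still in state {state} after {waited}s")
        sleep(interval)
        waited += interval


def cluster_state(cluster_id):
    """Return the current state of an EMR cluster via the AWS CLI."""
    result = subprocess.run(
        ["aws", "emr", "describe-cluster", "--cluster-id", cluster_id,
         "--query", "Cluster.Status.State", "--output", "text"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


# Example (requires AWS access, so it is left commented out):
# wait_for_state(lambda: cluster_state("j-282ORMDVCGVYM"),
#                target_states={"WAITING", "RUNNING"},
#                failure_states={"TERMINATED", "TERMINATED_WITH_ERRORS"})
```

The state source is passed in as a function, so the same loop can be reused for step status or termination checks. The AWS CLI also ships built-in waiters such as `aws emr wait cluster-running --cluster-id <id>`, which may be simpler if no custom handling is needed.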

Submit the Hadoop MapReduce Job

Now, we will submit a Hadoop streaming job that processes the input file using your mapper.py and reducer.py scripts.

Run the following command:

STEP_ID=$(aws emr add-steps \

--cluster-id j-282ORMDVCGVYM \

--steps '[{

"Name": "HadoopStreaming",

"ActionOnFailure": "CONTINUE",

"Type": "CUSTOM_JAR",

"Jar": "command-runner.jar",

"Args": [

"hadoop-streaming",

"-files", "s3://big-data-bucket-02/mapper.py,s3://big-data-bucket-02/reducer.py",

"-mapper", "mapper.py",

"-reducer", "reducer.py",

"-input", "s3://big-data-bucket-02/input/",

"-output", "s3://big-data-bucket-02/output/"

]

}]' --query 'StepIds[0]' --output text)

echo "Step submitted with ID: $STEP_ID"

Step submitted with ID: s-0208068100355EOCVQ42

The MapReduce step s-0208068100355EOCVQ42 has been submitted. Now, let’s monitor its status.
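The step definition submitted above is plain JSON, so it can also be generated instead of being embedded in a quoted shell string. The sketch below builds the same structure in Python; `streaming_step` is a hypothetical helper name, and the bucket layout (scripts at the bucket root, input under input/, results under output/) follows this post.

```python
import json


def streaming_step(bucket, name="HadoopStreaming"):
    """Build the step list for a Hadoop Streaming job run via command-runner.jar."""
    base = f"s3://{bucket}"
    return [{
        "Name": name,
        "ActionOnFailure": "CONTINUE",
        "Type": "CUSTOM_JAR",
        "Jar": "command-runner.jar",
        "Args": [
            "hadoop-streaming",
            # Ship both scripts to the nodes that run the tasks.
            "-files", f"{base}/mapper.py,{base}/reducer.py",
            "-mapper", "mapper.py",
            "-reducer", "reducer.py",
            "-input", f"{base}/input/",
            "-output", f"{base}/output/",
        ],
    }]


if __name__ == "__main__":
    print(json.dumps(streaming_step("big-data-bucket-02"), indent=2))
```

One way to use it is to redirect the output to a file such as steps.json (a name chosen here for illustration) and pass it to the CLI as `--steps file://steps.json`. Note that Hadoop refuses to write to an output location that already exists, so the output/ prefix should be empty or changed before resubmitting.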

Check the Step Status

Run the following command to check the status of the submitted job:

aws emr describe-step --cluster-id j-282ORMDVCGVYM --step-id s-0208068100355EOCVQ42 --query "Step.Status"

{

"State": "COMPLETED",

"StateChangeReason": {},

"Timeline": {

"CreationDateTime": "2025-02-24T21:49:46.273000+00:00",

"StartDateTime": "2025-02-24T21:50:06.309000+00:00",

"EndDateTime": "2025-02-24T21:50:54.795000+00:00"

}

}

From the output above, it is evident that the MapReduce step has completed successfully.

Verify the Output

Now, let’s check if the output files were generated successfully in the S3 bucket. Run the following command:

aws s3 ls s3://big-data-bucket-02/output/

2025-02-24 21:50:50 0 _SUCCESS

2025-02-24 21:50:45 23 part-00000

2025-02-24 21:50:46 21 part-00001

2025-02-24 21:50:50 0 part-00002

The output files have been successfully generated. Now, let’s download and inspect the results to verify their correctness.

Download the Output Files Locally

Run the following command to copy the output files from S3 to your local machine:

aws s3 cp s3://big-data-bucket-02/output/ ./output/ --recursive

download: s3://big-data-bucket-02/output/_SUCCESS to output/_SUCCESS

download: s3://big-data-bucket-02/output/part-00002 to output/part-00002

download: s3://big-data-bucket-02/output/part-00000 to output/part-00000

download: s3://big-data-bucket-02/output/part-00001 to output/part-00001

Once the files are downloaded, list the contents:

ls -la output/

total 16

drwxrwxr-x 2 vivekb vivekb 4096 Feb 24 21:56 .

drwxrwxr-x 3 vivekb vivekb 4096 Feb 24 21:56 ..

-rw-rw-r-- 1 vivekb vivekb 23 Feb 24 21:50 part-00000

-rw-rw-r-- 1 vivekb vivekb 21 Feb 24 21:50 part-00001

-rw-rw-r-- 1 vivekb vivekb 0 Feb 24 21:50 part-00002

-rw-rw-r-- 1 vivekb vivekb 0 Feb 24 21:50 _SUCCESS

The MapReduce job successfully processed the input file. The word counts are distributed across multiple output files, one per reducer: part-00000, part-00001, and part-00002.

part-00002 is empty. The dataset contains only a handful of distinct words, so they were not spread evenly across all reducers and one reducer received no keys.
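Hadoop decides which reducer receives a key by hashing the key and taking the result modulo the number of reducers. The sketch below illustrates that idea in Python. It uses `zlib.crc32` as a stand-in hash, which is an assumption made for illustration and not the hash Hadoop actually uses, so the exact assignment will not match the part files above. The function names are hypothetical.

```python
import zlib


def partition_for(key, num_reducers):
    """Pick a reducer index from a stable hash of the key (illustrative hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def assign_keys(keys, num_reducers):
    """Group the distinct keys by the reducer that would receive them."""
    partitions = [[] for _ in range(num_reducers)]
    for key in sorted(set(keys)):
        partitions[partition_for(key, num_reducers)].append(key)
    return partitions


if __name__ == "__main__":
    words = "Hello World! You are Cruel, Bye World!".split()
    for index, keys in enumerate(assign_keys(words, 3)):
        # An empty list corresponds to an empty part file.
        print(f"part-{index:05d}: {keys}")
```

With only six distinct keys, nothing guarantees that every reducer gets at least one of them, and whenever there are more reducers than distinct keys some part files must be empty.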

Teardown Process

Let’s first understand the dependencies between different AWS resources involved in the EMR cluster setup. This helps us to properly remove them in the correct order.

Terminate the EMR Cluster

- The cluster consists of EC2 instances. These instances must be terminated before deleting the IAM roles and policies associated with them.

- Running clusters will continue to incur costs, so terminating them is the first priority.

Verify EC2 Instance Termination

- Once the cluster is terminated, we must check that all associated EC2 instances have been terminated before proceeding further.

Detach IAM Policies from Roles

- The IAM roles used by the cluster have policies that grant the necessary permissions, such as accessing S3, launching EC2 instances, and managing logs.

- We need to detach these policies before deleting the roles.

Remove IAM Roles from Instance Profiles

- A role assigned to EC2 instances cannot be deleted while it is still part of an instance profile.

- We must remove the role from the instance profile before deleting it.

Delete IAM Instance Profiles and Roles

- The instance profile acts as a wrapper for the IAM role used by the EC2 instances.

- Once the role has been removed from the profile, the profile and then the roles can be deleted.

Delete the S3 Bucket (if required)

- If you no longer need the bucket where input files, scripts, and logs are stored, it should be deleted.

- AWS does not allow a non-empty bucket to be deleted, so we must first empty the bucket (including all object versions if versioning is enabled).
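The ordering constraints above can be written down as a small dependency graph and sorted, which is a handy check that a teardown script runs its steps in a valid order. This is a minimal sketch using Python's standard `graphlib` module (Python 3.9+). The step labels are hypothetical names chosen for this example, and placing the bucket deletion after cluster termination is an assumption based on the cluster writing its logs there.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must be finished before it.
TEARDOWN_DEPENDENCIES = {
    "terminate_cluster": set(),
    "verify_instances_terminated": {"terminate_cluster"},
    "detach_role_policies": {"verify_instances_terminated"},
    "remove_role_from_instance_profile": {"verify_instances_terminated"},
    "delete_instance_profile": {"remove_role_from_instance_profile"},
    "delete_roles": {"detach_role_policies", "remove_role_from_instance_profile"},
    "empty_and_delete_bucket": {"terminate_cluster"},
}


def teardown_order(dependencies=TEARDOWN_DEPENDENCIES):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for position, step in enumerate(teardown_order(), start=1):
        print(position, step)
```

Several orders can be valid, for example the bucket can be removed at any point after the cluster is gone. `TopologicalSorter` raises a `CycleError` if the declared dependencies contradict each other.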

Terminate the EMR Cluster

We first need to terminate the running cluster. This will stop all the associated EC2 instances.

~/AWS/BigData$ aws emr terminate-clusters --cluster-ids j-282ORMDVCGVYM

To check the termination status, run:

aws emr describe-cluster --cluster-id j-282ORMDVCGVYM --query "Cluster.Status"

{

"State": "TERMINATING",

"StateChangeReason": {

"Code": "USER_REQUEST",

"Message": "Terminated by user request"

},

"Timeline": {

"CreationDateTime": "2025-02-24T21:42:08.881000+00:00",

"ReadyDateTime": "2025-02-24T21:47:28.518000+00:00"

}

}

The EMR cluster is in the “TERMINATING” state, which means the shutdown process has started but is not yet complete.

Wait for the termination to complete. Run the following command periodically until the cluster’s State changes to "TERMINATED":

aws emr describe-cluster --cluster-id j-282ORMDVCGVYM --query "Cluster.Status"

{

"State": "TERMINATED",

"StateChangeReason": {

"Code": "USER_REQUEST",

"Message": "Terminated by user request"

},

"Timeline": {

"CreationDateTime": "2025-02-24T21:42:08.881000+00:00",

"ReadyDateTime": "2025-02-24T21:47:28.518000+00:00",

"EndDateTime": "2025-02-24T22:07:20.358000+00:00"

}

}

Verify and Clean Up EC2 Instances

The EMR cluster is now terminated. Let’s check if there are any remaining EC2 instances that were part of this cluster.

Check for Running EC2 Instances

Run the following command:

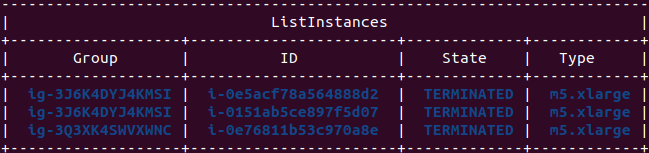

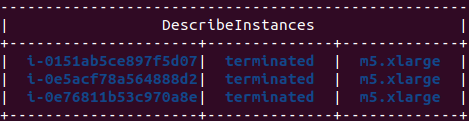

aws emr list-instances --cluster-id j-282ORMDVCGVYM --query "Instances[*].{ID:Ec2InstanceId, Type:InstanceType, State:Status.State, Group:InstanceGroupId}" --output table

Detach and Delete IAM Roles and Policies

All instances have been successfully terminated. Now, we focus on cleaning up IAM roles and policies associated with the EMR cluster.

List Attached Policies for Each Role

Check if any policies are still attached to the IAM roles:

$ aws iam list-attached-role-policies --role-name EMR_EC2_DefaultRole

{

"AttachedPolicies": [

{

"PolicyName": "AmazonElasticMapReduceforEC2Role",

"PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforEC2Role"

}

]

}

$ aws iam list-attached-role-policies --role-name EMR_DefaultRole

{

"AttachedPolicies": [

{

"PolicyName": "AmazonElasticMapReduceRole",

"PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceRole"

}

]

}

$ aws iam list-attached-role-policies --role-name EMR_AutoScaling_DefaultRole

{

"AttachedPolicies": [

{

"PolicyName": "AmazonElasticMapReduceforAutoScalingRole",

"PolicyArn": "arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforAutoScalingRole"

}

]

}

Detach Policies from IAM Roles

Now that we have confirmed policies are still attached to the roles, let’s detach them.

Run the following commands to detach the policies from the respective roles:

$ aws iam detach-role-policy --role-name EMR_EC2_DefaultRole \

--policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforEC2Role

$ aws iam detach-role-policy --role-name EMR_DefaultRole \

--policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceRole

$ aws iam detach-role-policy --role-name EMR_AutoScaling_DefaultRole \

--policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforAutoScalingRole

Verify That IAM Policies Have Been Detached

Now, let’s confirm that all policies have been successfully detached from the roles.

Run the following commands:

aws iam list-attached-role-policies --role-name EMR_EC2_DefaultRole

{

"AttachedPolicies": []

}

$ aws iam list-attached-role-policies --role-name EMR_DefaultRole

{

"AttachedPolicies": []

}

$ aws iam list-attached-role-policies --role-name EMR_AutoScaling_DefaultRole

{

"AttachedPolicies": []

}

Removing IAM Roles Properly

IAM Roles cannot be deleted if they are still associated with an Instance Profile.

Check the Existing Instance Profiles

Before we proceed, let’s list all instance profiles:

aws iam list-instance-profiles --query "InstanceProfiles[*].InstanceProfileName"

[

"DemoRoleForEC2",

"EMR_EC2_DefaultRole",

"S3ReadOnly"

]

Now, let’s go ahead and clean up the EMR_EC2_DefaultRole instance profile before deleting the role.

Check if the Instance Profile Contains a Role

Run the following command to check if the EMR_EC2_DefaultRole instance profile is still associated with a role:

aws iam get-instance-profile --instance-profile-name EMR_EC2_DefaultRole

{

"InstanceProfile": {

"Path": "/",

"InstanceProfileName": "EMR_EC2_DefaultRole",

"InstanceProfileId": "AIPAV3QSPQY55JS75N3NL",

"Arn": "arn:aws:iam::402691950139:instance-profile/EMR_EC2_DefaultRole",

"CreateDate": "2025-02-24T21:13:22+00:00",

"Roles": [

{

"Path": "/",

"RoleName": "EMR_EC2_DefaultRole",

"RoleId": "AROAV3QSPQY5VJD75B34G",

"Arn": "arn:aws:iam::402691950139:role/EMR_EC2_DefaultRole",

"CreateDate": "2025-02-24T21:12:10+00:00",

"AssumeRolePolicyDocument": {

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

}

],

"Tags": []

}

}

Remove the Role from the Instance Profile

Since the EMR_EC2_DefaultRole instance profile still contains the role, we must detach it first before deleting anything.

Run the following command:

aws iam remove-role-from-instance-profile --instance-profile-name EMR_EC2_DefaultRole --role-name EMR_EC2_DefaultRole

Verify the removal by running:

aws iam get-instance-profile --instance-profile-name EMR_EC2_DefaultRole

{

"InstanceProfile": {

"Path": "/",

"InstanceProfileName": "EMR_EC2_DefaultRole",

"InstanceProfileId": "AIPAV3QSPQY55JS75N3NL",

"Arn": "arn:aws:iam::402691950139:instance-profile/EMR_EC2_DefaultRole",

"CreateDate": "2025-02-24T21:13:22+00:00",

"Roles": [],

"Tags": []

}

}

Delete the Instance Profile

Since the EMR_EC2_DefaultRole instance profile no longer contains any roles, you can safely delete it:

aws iam delete-instance-profile --instance-profile-name EMR_EC2_DefaultRole

To verify that it is deleted, run:

aws iam list-instance-profiles --query "InstanceProfiles[*].InstanceProfileName"

[

"DemoRoleForEC2",

"S3ReadOnly"

]

Since the “EMR_EC2_DefaultRole” instance profile is no longer listed, it has been successfully deleted.

Delete the IAM Roles

Since all policies have been detached, we can now proceed with deleting the IAM roles.

Verify Remaining EMR Roles

Run the following command to check if any EMR roles still exist:

aws iam list-roles --query "Roles[*].RoleName" | grep -i EMR

"AWSServiceRoleForEMRCleanup",

"EMR_AutoScaling_DefaultRole",

"EMR_DefaultRole",

"EMR_EC2_DefaultRole"

The three EMR roles we created still exist (AWSServiceRoleForEMRCleanup is a service-linked role managed by AWS), so we need to make sure their policies are detached and then delete them.

Detach Policies from EMR Roles

AWS does not allow direct role deletion if policies are still attached.

Run the following commands to detach policies:

aws iam list-attached-role-policies --role-name EMR_EC2_DefaultRole

If any policies are listed, detach them:

aws iam detach-role-policy --role-name EMR_EC2_DefaultRole --policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforEC2Role

Repeat for the other roles:

aws iam detach-role-policy --role-name EMR_DefaultRole --policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceRole

aws iam detach-role-policy --role-name EMR_AutoScaling_DefaultRole --policy-arn arn:aws:iam::aws:policy/service-role/AmazonElasticMapReduceforAutoScalingRole

Verify that all policies are detached:

aws iam list-attached-role-policies --role-name EMR_EC2_DefaultRole

{

"AttachedPolicies": []

}

Delete IAM Roles

Once policies are detached, delete the roles:

aws iam delete-role --role-name EMR_EC2_DefaultRole

aws iam delete-role --role-name EMR_DefaultRole

aws iam delete-role --role-name EMR_AutoScaling_DefaultRole

Confirm Role Deletion

Run the following command to confirm that all EMR roles have been deleted:

aws iam list-roles --query "Roles[*].RoleName" | grep -i EMR

Check for any active EC2 instances:

aws ec2 describe-instances --query "Reservations[*].Instances[*].[InstanceId, State.Name, InstanceType]" --output table

By following this step-by-step guide, we have set up a Hadoop cluster on AWS EMR, run a MapReduce job on it, and then terminated the cluster and cleaned up the associated IAM resources.
